We are thrilled to introduce Sora 2, the latest flagship video and audio generation model from OpenAI. This advanced system represents a significant leap forward in creating physically accurate, realistic, and highly controllable videos. With synchronized dialogue and immersive sound effects, Sora 2 opens up new possibilities for creative expression. You can now explore these capabilities through the newly launched Sora app, designed to make video generation accessible and fun.

The original Sora model, released in February 2024, marked a foundational moment for video generation, akin to the GPT-1 era for language models. It showed early signs of success, with basic behaviors such as object permanence emerging from scaled-up pre-training. Since then, the Sora team has focused on improving world simulation, a critical step toward building AI that deeply understands the physical world. Reaching that goal requires mastering pre-training and post-training on large-scale video data, techniques that are still in their infancy compared with their counterparts for language models.

With Sora 2, we are advancing to what may be considered the GPT-3.5 moment for video. The model can generate content that was exceptionally difficult, or outright impossible, for earlier systems: Olympic gymnastics routines, backflips on a paddleboard that accurately model buoyancy and rigidity, or a figure skater performing a triple axel with a cat clinging to her head. These examples highlight Sora 2’s ability to handle complex physical dynamics and intricate scenarios.

Prior video models were often overoptimistic, morphing objects and distorting reality to fulfill a text prompt. For instance, if a basketball player missed a shot, the ball might teleport into the hoop. Sora 2, in contrast, adheres more closely to the laws of physics: if a shot is missed, the ball rebounds off the backboard. Interestingly, when the model does make “mistakes,” they often resemble errors made by the internal agent it is implicitly modeling. Sora 2 is not perfect, but this ability to simulate failure, not just success, is crucial for any useful world simulator.

Controllability has also seen a major improvement. Sora 2 can follow intricate multi-shot instructions while maintaining consistent world states, excelling in realistic, cinematic, and anime styles. As a general-purpose video-audio system, it generates sophisticated background soundscapes, speech, and sound effects with high realism. For example, it can depict two mountain explorers shouting in the snow, with audio that matches the urgency of the scene.

One of the most exciting features is the ability to inject real-world elements into Sora-generated environments. By observing a short video of a person, animal, or object, the model can insert that subject into any scene, accurately portraying its appearance and, where applicable, its voice. This “upload yourself” capability, known as cameos, allows for remarkable personalization. After a one-time recording in the app to verify their identity and capture their likeness, users can place themselves or their friends directly into Sora scenes with impressive fidelity.

To bring these innovations to life, we are launching a new social iOS app called “Sora,” powered by Sora 2. Inside the app, users can create videos, remix each other’s generations, discover content in a customizable feed, and use cameos to bring themselves and their friends into videos. In early internal testing at OpenAI, cameos helped colleagues connect in new ways, reinforcing the app’s potential as a platform for creativity and community.

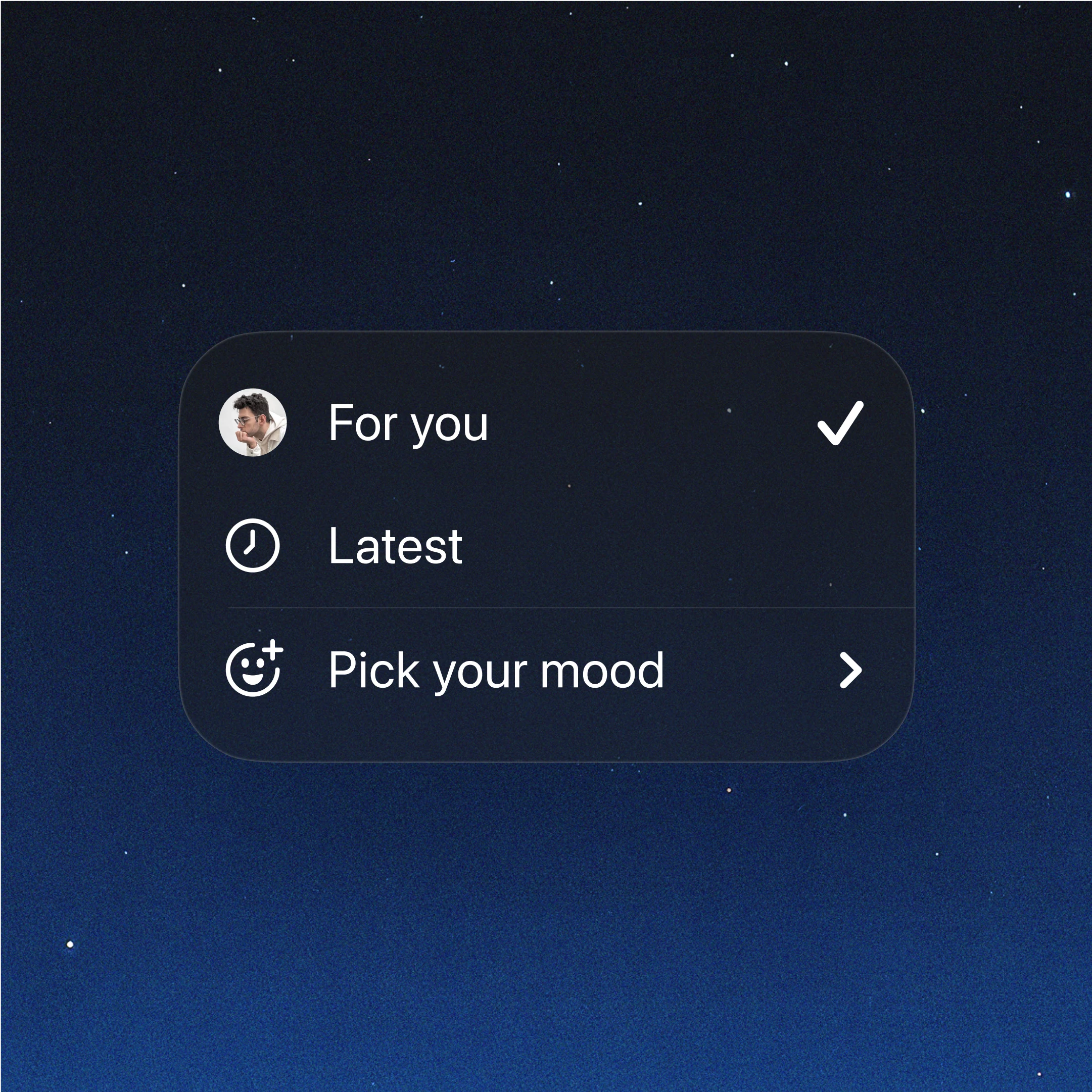

Responsible deployment is a top priority. We address concerns such as doomscrolling and addiction by giving users control over their feeds. Using OpenAI’s large language models, we built recommender algorithms that can be instructed in natural language. The app also includes mechanisms to poll users about their wellbeing and proactively adjust their feeds. By default, the feed favors content from people users follow or interact with, and it prioritizes videos likely to inspire users’ own creations over videos suited to passive consumption. In short, the app is designed to maximize creation rather than consumption, and it is built for use with friends.

Protecting teen wellbeing is essential. For teens, we have implemented default daily limits on video generations and stricter cameo permissions, and we have scaled up human moderation to address bullying. Parental controls in ChatGPT let parents manage settings such as infinite-scroll limits and algorithmic personalization. With cameos, users retain end-to-end control over their likeness: they decide who can use it and can revoke access at any time.

Monetization should stay aligned with user wellbeing, and we intend to be transparent about it. Our current plan is to give users the option to pay for extra video generations if demand exceeds available compute. We will communicate any changes to this plan openly, and user wellbeing will remain our main goal.

Sora 2 is available now in the Sora iOS app, initially rolling out in the U.S. and Canada, with plans for rapid expansion. Access is free to start, with generous limits to encourage exploration. ChatGPT Pro users can access the experimental Sora 2 Pro model on sora.com, and we plan to release Sora 2 in the API. Sora 1 Turbo remains available, and all creations will continue to be stored in users’ sora.com libraries.

Video models are evolving rapidly, and general-purpose world simulators like Sora 2 have the potential to reshape society and accelerate human progress. We believe Sora will bring joy, creativity, and connection to the world, marking the beginning of a new era of co-creative experiences.